NLP = Natural Language Processing

CALL = Computer-assisted Language Learning

NLP makes chunks to help businesses make money…

In another post, I described how I feel that natural language processing algorithms and sentence chunking/parsing techniques are often designed to help businesses make money. They are typically used by data scientists to parse large amounts of text for tasks such as:

- financial analysis

- predictive search (Google autocomplete)

- spotting consumer trends

- analyzing “sentiment” (i.e. positive vs. negative product reviews)

- creating customer service AI “chatbots”

- voice recognition (Alexa, Siri)

Usually these tasks are achieved by doing the following:

- parsing sentences into “chunks” to extract useful information (noun phrases, actions, etc.)

- looking at connotations of words to determine how “positive” a text is

- using word frequency to make predictions
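Two of the techniques listed above can be sketched in a few lines of plain Python. This is a toy illustration, not how any particular product works: the sentiment lexicon is a hypothetical six-word list (real lexicons score thousands of words), and "prediction" is simply counting which word most often follows another, roughly the idea behind frequency-based autocomplete. The function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy lexicon; real sentiment lexicons contain thousands of
# scored words.
SENTIMENT_LEXICON = {"great": 1, "love": 1, "helpful": 1,
                     "slow": -1, "broken": -1, "terrible": -1}


def tokenize(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    words = (w.strip(".,!?;:\"'").lower() for w in text.split())
    return [w for w in words if w]


def sentiment_score(text):
    """Sum word polarities from the lexicon: >0 positive, <0 negative."""
    return sum(SENTIMENT_LEXICON.get(w, 0) for w in tokenize(text))


def build_bigram_model(corpus):
    """Count how often each word follows each other word."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = tokenize(sentence)
        for prev, nxt in zip(words, words[1:]):
            following[prev][nxt] += 1
    return following


def predict_next(model, word, k=3):
    """Suggest up to k most frequent next words, autocomplete-style."""
    return [w for w, _ in model[word.lower()].most_common(k)]


print(sentiment_score("I love this app, it is great!"))          # → 2
print(sentiment_score("Terrible update. Everything is slow."))   # → -2
queries = ["how to learn english", "how to learn spanish", "how to cook rice"]
model = build_bigram_model(queries)
print(predict_next(model, "to"))                                 # → ['learn', 'cook']
```

Note that neither function looks at sentence structure at all: the business goal (a score, a suggestion) is reached without the learner-relevant information in the sentence ever being surfaced.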

…not to help educators teach languages.

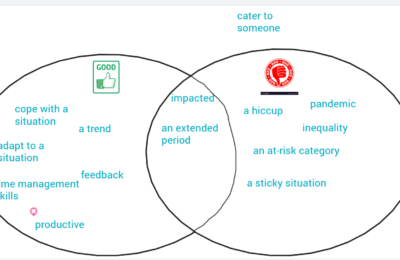

Most of the applications listed above accomplish their goals by extracting information from large amounts of text (customer reviews, news, financial news headlines, search histories, etc.). In doing this, they also parse sentences into noun phrases, verb phrases, and other such “chunks.” However, this chunking step is largely just a stepping stone on the way to the ultimate goal of finding useful information to help drive business decisions.
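As a rough illustration of the chunking step, here is a minimal noun-phrase chunker. It assumes the sentence has already been part-of-speech tagged with Penn Treebank tags (hand-written here; a tagger such as NLTK's `pos_tag` would normally supply them). The single pattern, an optional determiner, any adjectives, then one or more nouns, is a toy grammar in the style of the `<DT>?<JJ>*<NN.*>+` patterns commonly given to NLTK's `RegexpParser`; real chunkers use richer grammars or trained models. `chunk_noun_phrases` is a hypothetical name.

```python
def chunk_noun_phrases(tagged):
    """Group (word, tag) pairs into NP chunks matching DT? JJ* NN+.

    Words outside any noun phrase are returned as single-word "O" chunks.
    """
    chunks, i, n = [], 0, len(tagged)
    while i < n:
        j = i
        if tagged[j][1] == "DT":                             # optional determiner
            j += 1
        while j < n and tagged[j][1].startswith("JJ"):       # adjectives
            j += 1
        k = j
        while k < n and tagged[k][1].startswith("NN"):       # one or more nouns
            k += 1
        if k > j:  # at least one noun, so tagged[i:k] is a noun phrase
            chunks.append(("NP", [w for w, _ in tagged[i:k]]))
            i = k
        else:
            chunks.append(("O", [tagged[i][0]]))
            i += 1
    return chunks


tagged = [("The", "DT"), ("new", "JJ"), ("app", "NN"), ("helps", "VBZ"),
          ("students", "NNS"), ("learn", "VB"), ("English", "NNP")]
chunks = chunk_noun_phrases(tagged)
print([" ".join(words) for label, words in chunks if label == "NP"])
# → ['The new app', 'students', 'English']
```

In a business pipeline, these noun phrases would typically be passed on to a counting or classification step and then discarded.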

But it’s these “chunks” that hold some of the most useful information for language learners: the subject of a sentence, its prepositions, the relationships between its noun phrases, and its collocates (words that commonly pair with other words, such as “emerging trend,” “positive step,” etc.).
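Collocates are one of the easier chunk types to extract automatically. The sketch below ranks adjacent word pairs by pointwise mutual information (PMI), one standard association measure among several; the choice of PMI, the `min_count` cutoff, and the five-sentence corpus are illustrative assumptions, and `collocates` is a hypothetical name.

```python
import math
from collections import Counter


def collocates(sentences, min_count=2):
    """Rank adjacent word pairs by pointwise mutual information (PMI).

    PMI(x, y) = log2(P(x, y) / (P(x) * P(y))): pairs that co-occur more
    often than their individual frequencies would predict score higher.
    """
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        unigrams.update(words)
        bigrams.update(zip(words, words[1:]))
    n_words, n_pairs = sum(unigrams.values()), sum(bigrams.values())
    scores = {}
    for (w1, w2), count in bigrams.items():
        if count < min_count:
            continue  # PMI is unreliable for very rare pairs
        p_pair = count / n_pairs
        p_w1, p_w2 = unigrams[w1] / n_words, unigrams[w2] / n_words
        scores[(w1, w2)] = math.log2(p_pair / (p_w1 * p_w2))
    return sorted(scores, key=scores.get, reverse=True)


corpus = [
    "an emerging trend in the market",
    "this is an emerging trend",
    "a positive step for the team",
    "it was a positive step",
    "the team saw the market",
]
print(collocates(corpus))
```

On this tiny corpus, “emerging trend” and “positive step” outscore “the market” and “the team,” because the very frequent word “the” lowers the PMI of any pair it appears in. Pairs such as “an emerging” also score highly here, which is why real collocation extraction needs large corpora; NLTK's `BigramCollocationFinder` offers PMI and other measures for that purpose.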

Because educational uses of NLP are usually different from the traditional applications mentioned above, they often help drive new research in NLP. That is, figuring out how to use NLP in TESOL may change how NLP itself works. A simple example is training speech recognition and text-analysis algorithms to work reliably even when a student’s pronunciation or grammar is non-nativelike, or when their essays are short and contain many errors, as with younger learners.

Another example: if we want NLP technology to run behind the scenes of an interactive online language learning app, it needs to process text quickly. I ran into this while developing TextMix: the Python package I used (NLTK) could parse sentences quickly enough to render them on the screen in “chunks,” but if the app were to chunk sentences as the user hovered the mouse over them, for example, it might not be fast enough to do this effectively.
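One common way to keep hover interactions responsive, offered here as an assumption rather than a description of how TextMix works, is to chunk each sentence once (for example, when the page loads) and cache the result, so that a hover event only performs a lookup. In this sketch, `slow_chunk` is a hypothetical placeholder that sleeps to simulate parser latency and returns the words as "chunks"; in a real app it would wrap the NLTK tagging and chunking calls. The other function names are also hypothetical.

```python
import time
from functools import lru_cache


def slow_chunk(sentence):
    """Hypothetical stand-in for an expensive tagging + chunking call."""
    time.sleep(0.05)  # simulate parser latency
    return tuple(sentence.split())  # placeholder "chunks": just the words


@lru_cache(maxsize=4096)
def chunk_cached(sentence):
    """Chunk each distinct sentence once; repeat calls return the cached tuple."""
    return slow_chunk(sentence)


def precompute(sentences):
    """Warm the cache when the page loads, before any hover happens."""
    for sentence in sentences:
        chunk_cached(sentence)


def on_hover(sentence):
    """Hypothetical hover handler: a cache lookup, not a fresh parse."""
    return chunk_cached(sentence)


page = ["The new app helps students.", "They hover over each sentence."]
precompute(page)           # two slow parses
print(on_hover(page[0]))   # served from the cache
print(chunk_cached.cache_info())
# → CacheInfo(hits=1, misses=2, maxsize=4096, currsize=2)
```

The cached value is a tuple because `lru_cache` hands the same object to every caller, so it should not be mutable.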

Macro / top-down language learning applications of NLP

So far, I’ve only come across a few tools that use NLP for language learning, and they mostly help automate essay grading to save teachers time, as described in this article.

Litman (2016) also describes the potential of NLP for tasks such as identifying which forum posts in MOOCs need review by instructors.

She also explains how the educational applications of NLP are quite different from those for which NLP techniques were originally created (i.e. business). For example, she states that “standard ‘proofreading’ tools do not focus on errors that are particularly important for language learners” (p. 4171). She describes the challenges of applying NLP to student-created texts, which differ from professionally written texts such as newspaper articles and contain errors not typically made by native speakers.

Where are the bottom-up language learning applications of NLP? (focus-on-form, vocab instruction, etc.)

I did find an article on using NLP to give feedback to Japanese learners on their writing, but other than that, my search has come up dry.

…This post to be updated and continued within a few days…