With natural language processing techniques, text can be tokenized into sentences and words, and these can be “chunked” according to regular expression matching patterns. Python’s Natural Language Toolkit is one way of doing this.

We can chunk sentences into noun phrases, complex verbs, and prepositional phrases. We can also chunk things like relative clauses or phrasal verbs, but this can interfere with chunking other things.

In this post, I aim to:

- explore how different ways of chunking can be more or less pedagogically useful to learners.

- draw attention to the need for NLP techniques to be applied to language learning, not just parsing search queries or mining data from the internet to help businesses make more money.

- Share my app, ChunkReader, which automatically parses the chunks of text according to my (subjective) rules on how to make the most pedagogically-useful chunks!

Benefits of chunking

Chunking is a form of text enhancement (i.e. drawing the reader’s attention to something in the text, or making a grammar structure more noticeable by highlighting). This can be helpful for learners, because it may increase syntactic awareness (Park & Warschauer, 2016), has been correlated with retention of formulaic expressions (Nguyen, 2014), and increases reading processing efficiency (Fender, 2001; Pulido, 2021). Also, if chunks happen to be collocations and they are made more apparent in the text, they may be acquired as a single unit in place of individual words (Durrant & Schmitt, 2010; Conklin & Schmitt, 2012). Woah!

How chunking works (basically):

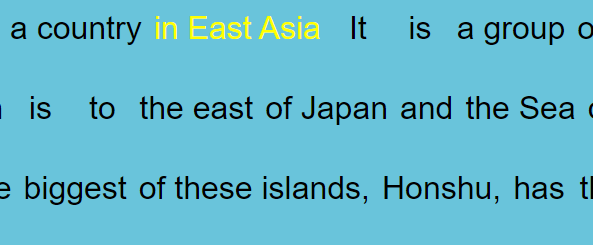

For example, if we want to chunk a verb phrase like may increase, we can set a rule to capture any number of verbs together.

If we want to chunk a phrasal verb like look up, we can set a rule to capture a verb + preposition.

These “rules” are written using modified regular expressions to define a “chunking grammar” in Python NLTK. More details are here.

When chunking becomes subjective:

But what if we have a sentence like this: The university admissions staff stated that enrollment may steadily increase over the next three years. Which method of chunking is most useful for learners?

- If we chunk for verb phrases, we get may steadily increase

- If we chunk for phrasal verbs, we get increase over (which isn’t really a phrasal verb)

- If we chunk for prepositional phrases, we get over the next three years

- If we choose all 3 together, we aren’t really chunking at all. We’d get may steadily increase over the next three years. That’s almost the whole sentence!

Method #1 is nice because it includes the collocation steadily increase.

Method #2 could confuse learners into thinking that increase is often followed by over, when it’s not really.

Method #3 gives us the nice collocation over the next ___ years, which is useful for learners to acquire as a “chunk.”

The issue is that we have to choose one method of chunking!

Introducing ChunkReader!

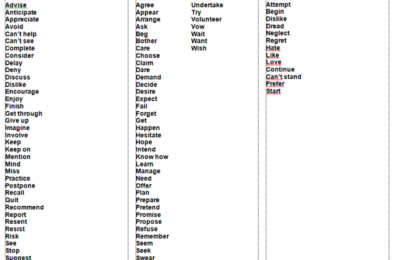

I created a simple app called ChunkReader that using Python’s NLTK to chunk sentences by a grammar I felt was most useful for learners. Here are the rules of this grammar:

| Syntactic unit | Chunking grammar |

| Prepositional phrase OR Noun phrase | (P*) DET* ADJ* N+ |

| Verb phrase OR infinitive | (to) V+ |

| Relative clause | that/which/who VP |

The abbreviations in the chunking grammar come from the Penn Treebank Project, which is used as the POS tagger. Here is the complete list of abbreviations.

It’s interesting to note that the rules for chunking which I arbitrarily chose aren’t necessarily useful for most other applications of NLP, such as parsing search queries to serve up relevant search results, or mining the internet for textual data to analyze in order to find trends in news or business. These chunking rules are designed to make the most useful chunks of language for students: mostly formulaic expressions, collocations, clauses, and the like. Of course, this is totally subjective. One might feel that beginner students could benefit from just chunking a sentence into subject, verb, and object. Maybe we just want to chunk phrasal verbs. It depends on the context and what’s most helpful to particular students.

And here is the app: ChunkReader Try it and let me know what you think! It’s still full of bugs.

References

Conklin, K. & Schmitt, N. (2012). The processing of formulaic language. Annual Review of Applied Linguistics, 32, 45–61. https://doi.org/10.1017/S0267190512000074

Durrant, P. & Schmitt, N. (2010) Adult learners’ retention of collocations from exposure. Second Language Research, 26(2), 163–188. http://doi.org/10.1177/0267658309349431

Fender, M. (2001). A review of L1 and L2/ESL word integration skills and the nature of L2/ESL word integration development involved in lower-level text processing. Language Learning, 51(2), 319–396.

Nguyen, H. (2014). The acquisition of formulaic sequences in high-intermediate ESL learners. Publicly Accessible Penn Dissertations. 1385. http://repository.upenn.edu/edissertations/1385

Pulido, M. F. (2021). Individual chunking ability predicts efficient or shallow L2 processing: Eye-tracking evidence from multiword units in relative clauses. Frontiers in Psychology, 11, 1-18. https://doi.org/10.3389/fpsyg.2020.607621

hi Brendon, nice idea, will keep an eye on chunkreader as it develops!

ta

mura